Yichen Peng

I am a research assistant professor at the Koike Lab, Institute of Science Tokyo (formerly Tokyo Institute of Technology), working with Prof. Hideki Koike and Prof. Erwin Wu. I am also a visiting scholar at Keio University, working with Prof. Kai Kunze. I am currently visiting the KLab at Carnegie Mellon University, advised by Prof. Kris Kitani. My research focuses on computer vision, human motion understanding, and multimodal generative models, with applications in skill training and human motion generation.

I received my Master's degree and Ph.D. in Computer Science from the Japan Advanced Institute of Science and Technology (JAIST) in 2021 and 2024, respectively, supervised by Prof. Kazunori Miyata (funded by JST-SPRING). I also worked closely with Prof. Haoran Xie and Prof. Tsukasa Fukusato (Waseda University) on sketch-based 2D/3D generation and interfaces.

GitHub / Google Scholar / LinkedIn / CV

Email: yichen.p.8896[at]m.isct.ac.jp


Research Interests

My research focuses on computer vision, human motion understanding (pose estimation and motion capture), and multimodal generative models, with applications in skill training (piano, skiing, golf) and human motion generation (co-speech and conversational gestures). I am also interested in sketch-based 2D/3D generation and interfaces.

Publications


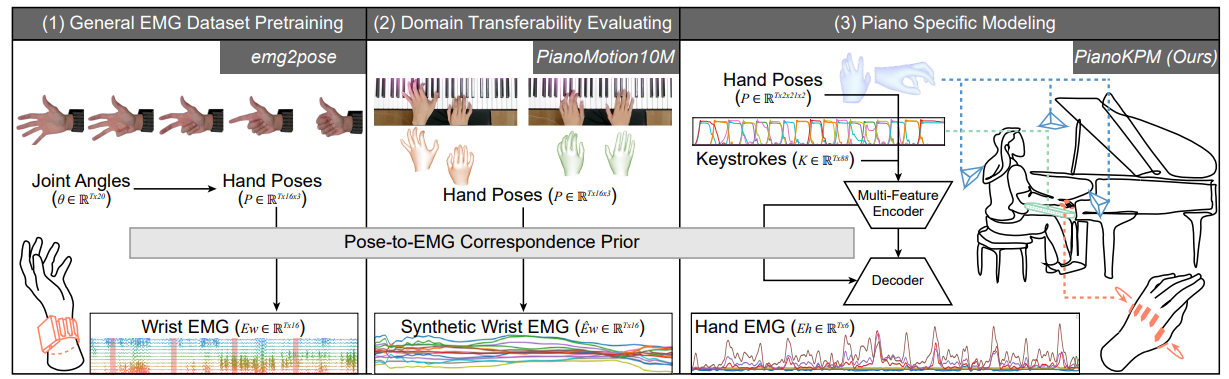

From Pose to Muscle: Multimodal Learning for Piano Hand Muscle Electromyography
Ruofan Liu, Yichen Peng, Takanori Oku, Chen-Chieh Liao, Erwin Wu, Shinichi Furuya, Hideki Koike
Conference on Neural Information Processing Systems (NeurIPS 2025), 2025
paper / code / news


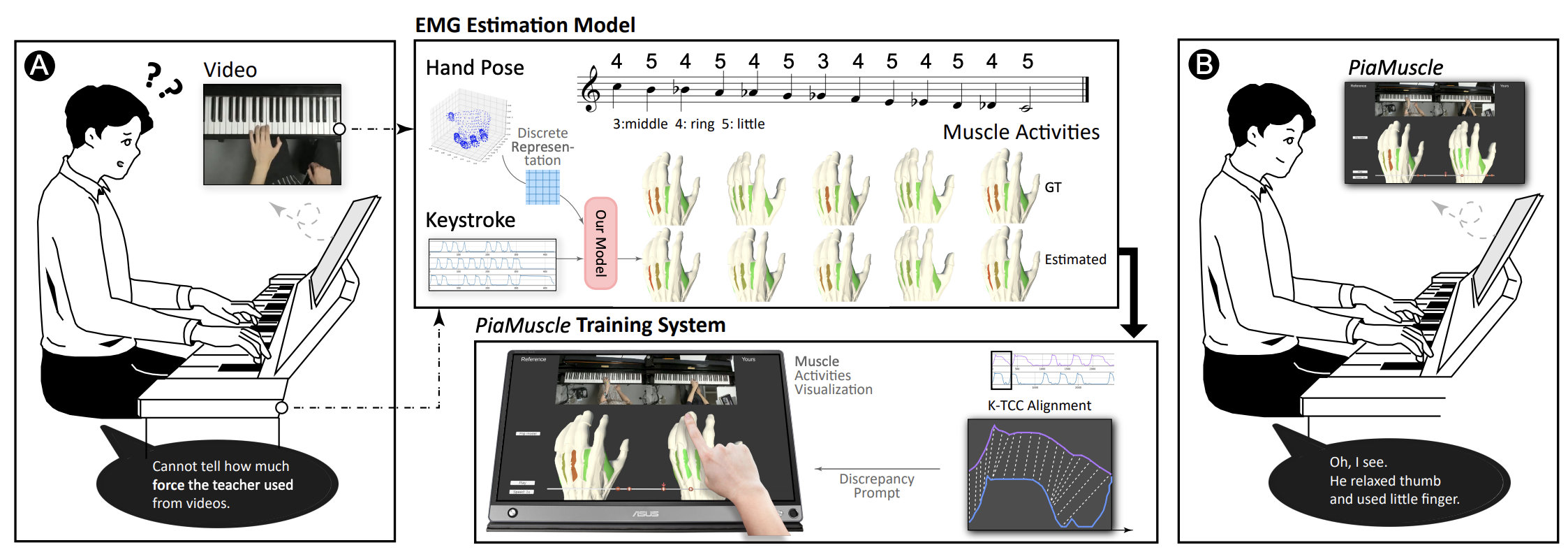

PiaMuscle: Improving Piano Skill Acquisition by Cost-effectively Estimating and Visualizing Activities of Miniature Hand Muscles
Ruofan Liu, Yichen Peng, Takanori Oku, Chen-Chieh Liao, Erwin Wu, Shinichi Furuya, Hideki Koike
Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems (CHI 2025), 2025
paper


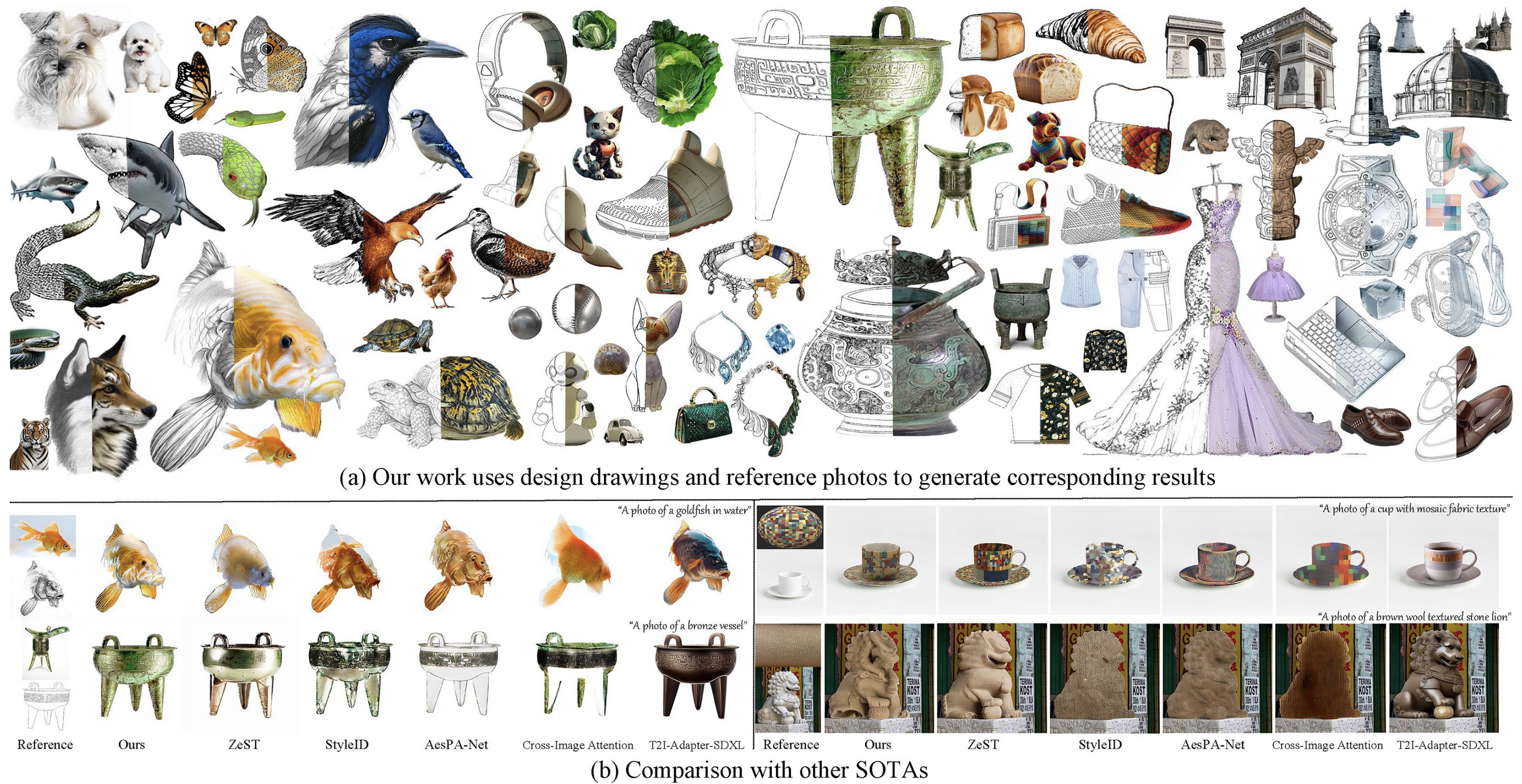

LineArt: A Knowledge-guided Training-free High-quality Appearance Transfer for Design Drawing with Diffusion Model
Xi Wang, Hongzhen Li, Heng Fang, Yichen Peng, Haoran Xie, Xi Yang, Chuntao Li
Proceedings of the Computer Vision and Pattern Recognition Conference (CVPR 2025), 2025
paper / code / datasets


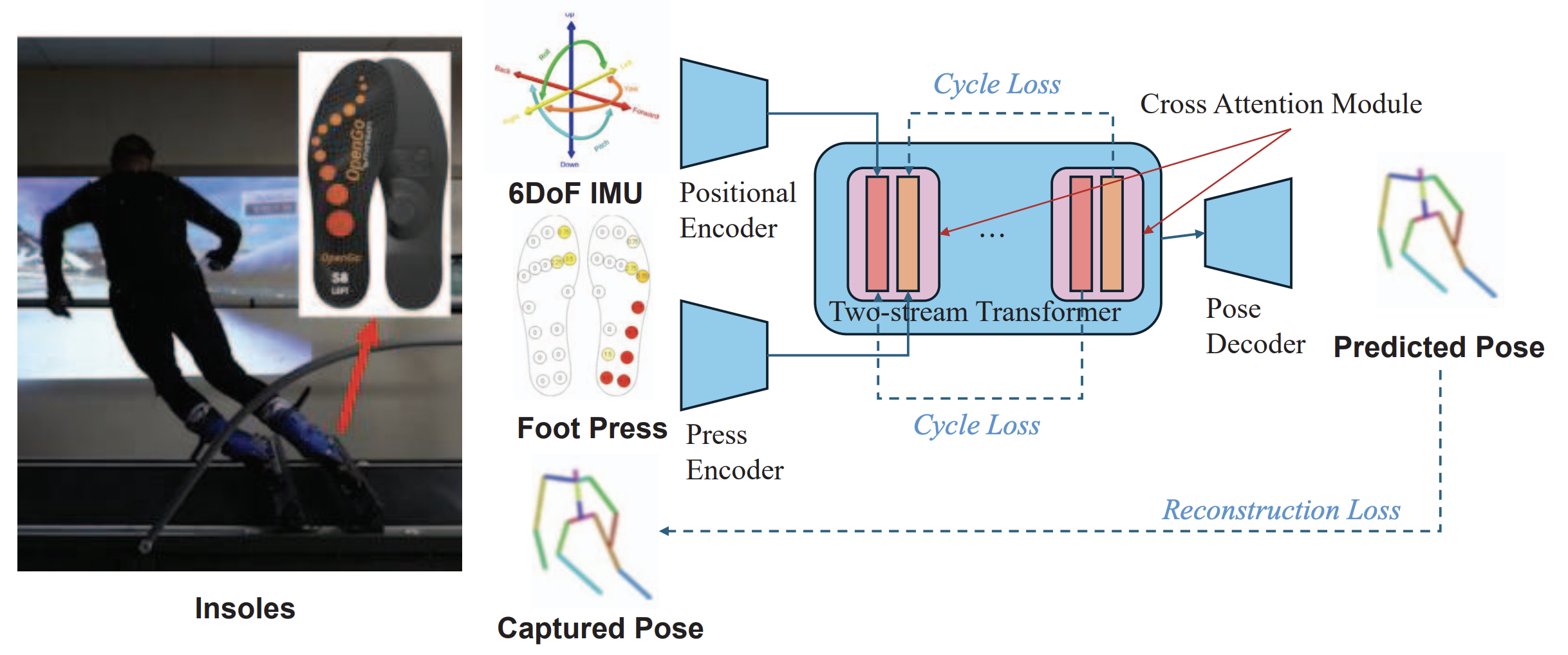

Dual-modal 3D Human Pose Estimation Using Insole Foot Pressure Sensors
Erwin Wu, Yichen Peng, Rawal Khirodkar, Hideki Koike, Kris Kitani
2024 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR 2024 Workshop), 2024
paper / code / datasets


EMAGE: Towards Unified Holistic Co-Speech Gesture Generation via Expressive Masked Audio Gesture Modeling
Haiyang Liu, Zihao Zhu, Giorgio Becherini, Yichen Peng, Mingyang Su, You Zhou, Xuefei Zhe, Naoya Iwamoto, Bo Zheng, Michael J. Black
Proceedings of the Computer Vision and Pattern Recognition Conference (CVPR 2024), 2024
paper / code / datasets


BEAT: A Large-Scale Semantic and Emotional Multi-Modal Dataset for Conversational Gestures Synthesis
Haiyang Liu, Zihao Zhu, Naoya Iwamoto, Yichen Peng, Zhengqing Li, You Zhou, Elif Bozkurt, Bo Zheng
European Conference on Computer Vision (ECCV 2022), 2022
paper / code / datasets


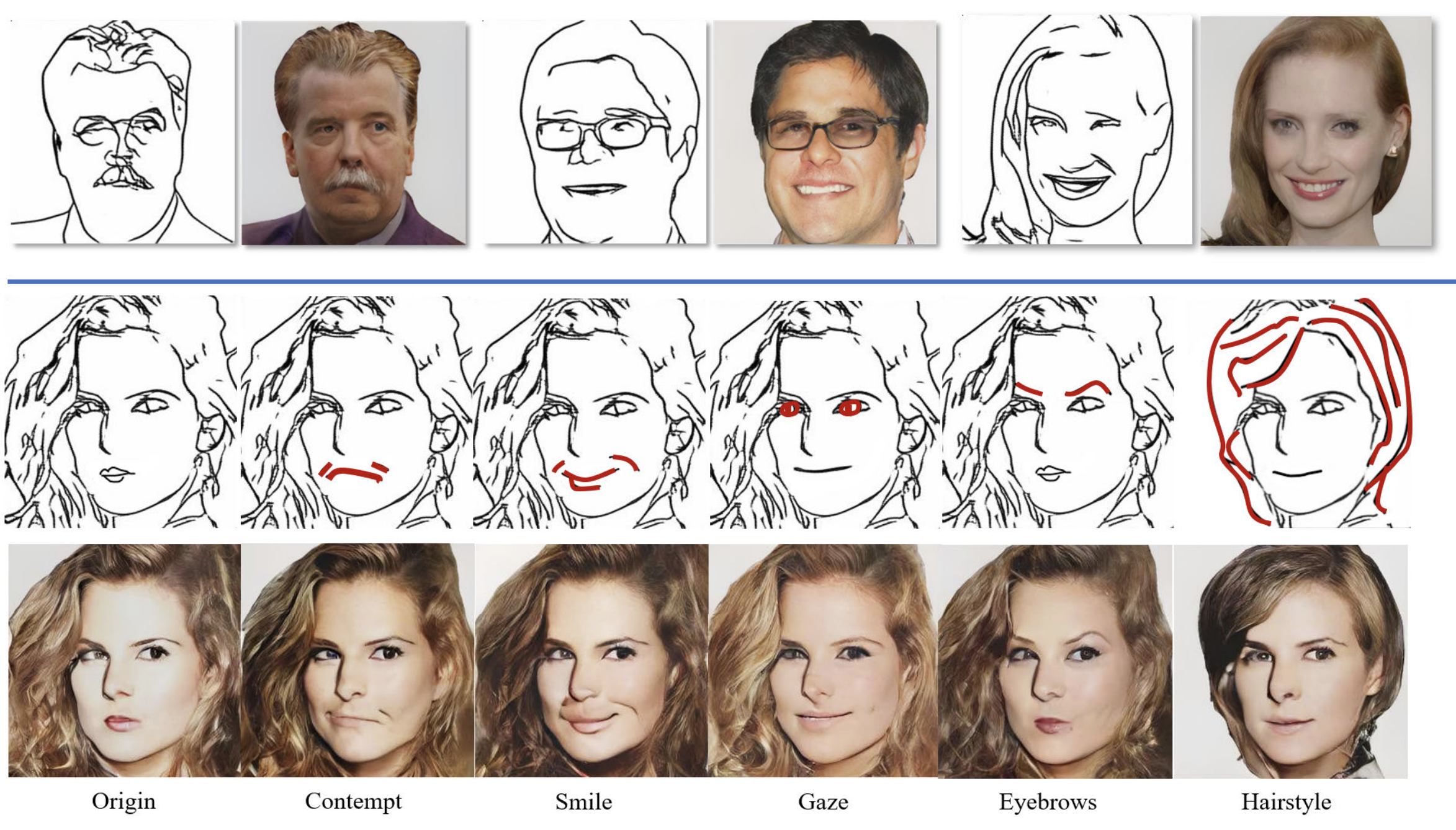

DiffFaceSketch: Sketch-Guided Latent Diffusion Model for High-Fidelity Face Image Synthesis
Yichen Peng, Chunqi Zhao, Haoran Xie, Tsukasa Fukusato, Kazunori Miyata
IEEE Access, 2023
paper / code


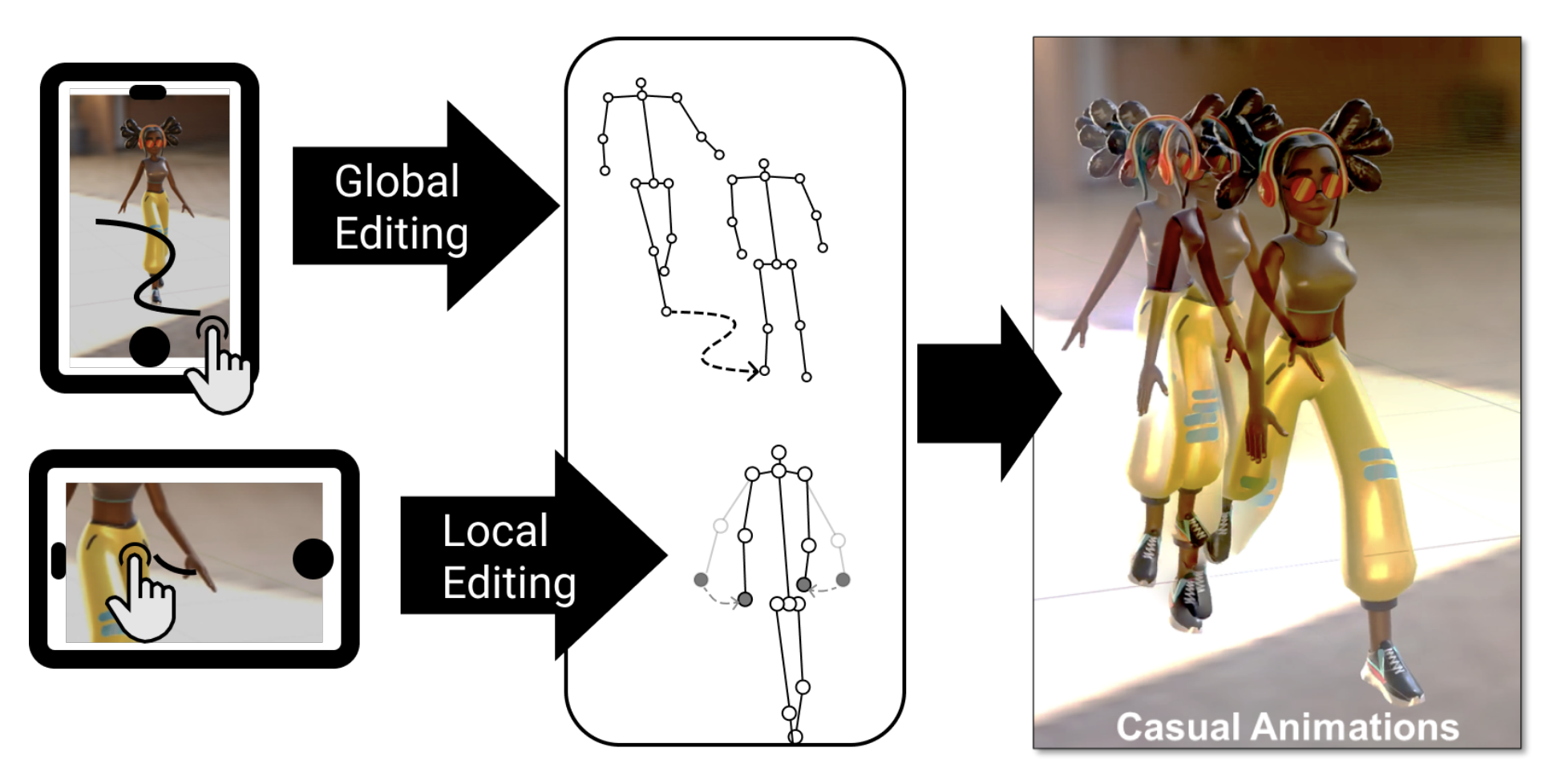

DualMotion: Global-to-Local Casual Motion Design for Character Animations
Yichen Peng, Chunqi Zhao, Haoran Xie, Tsukasa Fukusato, Kazunori Miyata, Takeo Igarashi
IEICE Transactions on Information and Systems, 2023
paper


Design and source code from Jon Barron's website